A3 H8 Network Security

Most security problems are intentionally caused by malicious people

trying to gain some benefit or harm someone.

Network security problems can be divided roughly into four

intertwined areas: secrecy, authentication, nonrepudiation and

integrity. Secrecy has to do with keeping information out of the

hands of unauthorized users. Authentication deals with determining

whom you are talking to before revealing sensitive information.

Nonrepudiation deals with signatures: how do you prove that a

customer really placed an electronic order for 10000 pieces at $10

per piece when he later claims the price was $8? Integrity deals

with knowing that the received message was not modified in transit.

Where in the protocol stack does security belongs? Every layer

has something to contribute. In the physical layer transmission lines

can be enclosed in sealed tubes containing argon gas at high

pressure, drilling a hole in it will release some gas and drop the

pressure sufficiently to trigger an alarm. It was actually used in

some military applications.

In the data link layer, packets on a point-to-point line can be

encoded as they leave one machine and decoded as they enter the

other. When packets have to transverse routers, it is still

vulnerable to attacks from within the router. Also it does not allow

some sessions to be protected (e.g. on-line purchases by credit

cards) and others not. Nevertheless, link encryption can be

added to any network easily and is often useful. In the network

layer, firewalls can be used to keep packets in or out as desired. In

the transport layer, entire connections can be encrypted, end to end,

that is: process to process.

Although these solutions help with the secrecy issues and many

people are working to improve them, none of them solve the

authentication or nonrepudiation problem in a sufficiently general

way.

8.1 Cryptography

8.1.1 Introduction to Cryptography

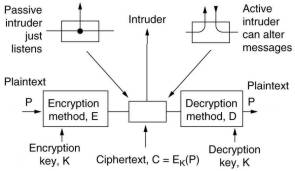

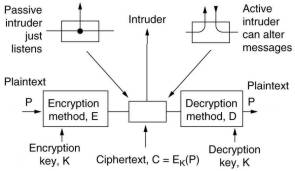

The messages to be encrypted, the plaintext, are transformed by

a function Ek that is parameterized by a key K,

producing the ciphertext. A passive intruder can

read the ciphertext, an active intruder can insert his own

messages or modify legitimate messages. The receiver decodes the

ciphertext using a function DK:

DK

( EK (P) ) = P

The art of devising ciphers, cryptography, and breaking

them, cryptanalysis, is collectively known as cryptology.

D and E are mathematical functions of 2 parameters, text and

the key. A fundamental rule is that one must assume that the

functions are known (Kerckhoff's principle). The amount of time to devise and test a new

algorithm every time the old one is compromised, makes it

impractical to keep it secret. By publicizing the algorithm, the

cryptographer also gets free consulting from a large number of

academic cryptologists eager to break the system so they can publish papers.

The key consists of a relatively short string that selects one

of the many potential encryptions. The work factor for breaking the

system by exhaustive search through the key space is exponential in

the key length. For conventional ciphers the encryption key and the

decryption key are the same or easily derivable from each other. This

made the key distribution the weak link in most cryptosystems, if an

intruder could steal the key in use, the system was worthless.

From the cryptanalyst's point of view there are three principal

variations: no plaintext available (ciphertext only), matched

ciphertext and plaintext available (known plaintext),

encryption of own plaintext available (chosen plaintext). It

is naive to assume that a cipher is secure when it can withstand a

ciphertext only attack. In many cases the cryptanalyst can make a

good guess at parts of the plaintext.

8.1.2 Substitution Ciphers

In a substitution cipher each letter or group of letters is replaced by another letter or group of letters.

A problem is that it easily can be broken by using statistical properties of natural languages.

In English e is the most common letter, followed by t,o,a, etc. The most common digrams are th, in, er, etc.

For trigrams the sequence is the, ing, and, ion, etc.

Further one can look for words, that are likely to occur in a message, like financial.

It has a repeated i with 4 letters in between, which can be searched for.

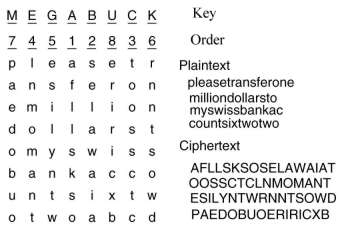

8.1.3 Transposition Ciphers

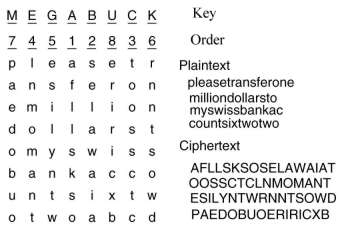

A transposition cipher reorders the letters but does not disguise them.

The key is a word or phrase not containing any repeated letters. Its purpose is to number the columns,

column 1 being under the letter closest to the start of the alphabet, and so on.

Also this code can be easily broken.

8.1.4 One-Time Pads

Constructing an unbreakable cipher is easy. Convert the plaintext into a bit string, for example

by using its ASCII representation. Then choose a random bit string with the same length as the key.

Then use the XOR of these two strings as the ciphertext.

This one-time pad method is unbreakable because all possible plaintexts of the given length

are equally likely. Of coarse it has the disadvantage that the key is very long and cannot be memorized,

so its distribution is unsafe.

Interestingly there might be a solution to this problem. It uses the quantum mechanical properties

of single photons, namely that they can be polarized. A bit is send over a fiber cable as a single photon, a qubit

with the information encoded in the polarization. A random sequence of 2 sets of polarization filters

(rotated at 45 degrees) is used for sending and receiving. Using a certain protocol for exchanging

in plaintext the sequence of polarization filters used, a not interceptable one-time pad can be exchanged.

The idea has been shown to operate over distances of 60 km of fiber, but still needs a lot of technical development.

8.1.5 Two fundamental Cryptographic Principles

All encrypted messages must contain some redundancy, that is, information not needed to understand the message.

It should be used to check the validity of the message, like a CRC code would do. This redundancy is needed

to prevent active intruders from sending garbage and tricking the receiver into decrypting the garbage and acting on the

"plaintext". However the redundancy could make it easier for passive intruders to break the system, so there is some tension here.

Some measures should be taken to ensure that each message received can be verified as being fresh,

that is, sent very recently. Otherwise an active intruder could just play back old messages, tricking the receiver

into repeating certain actions.

8.2 Symmetric-key Algorithms

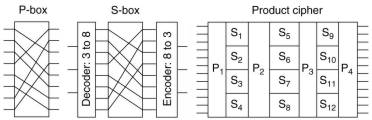

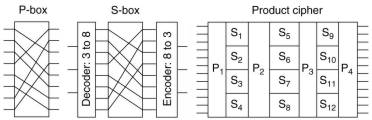

Modern conventional ciphers use the traditional transpositional and substitution methods, but

with very complex algorithms. They can be implemented in hardware for speed or in software for flexibility.

To the left is a P-box (permutation box)performing a transposition. In the middle is a S-box performing

a substitution. To the right is a combination to form a product cipher converting a 12 bit input

to a 12 bit output. The S-boxes are split to operate each on 3 bit input, thus having 23=8 crossing wires,

in stead of 212=4096 crossing wires. The P and S boxes are cascaded to generate a complex algorithm

Current hardware implementations have at least 18 physical stages in stead of the 7 shown here.

A software implementation is programmed as a loop with at least 8 iterations, called rounds.

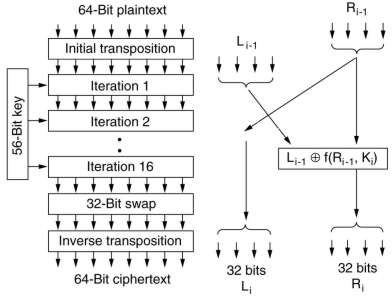

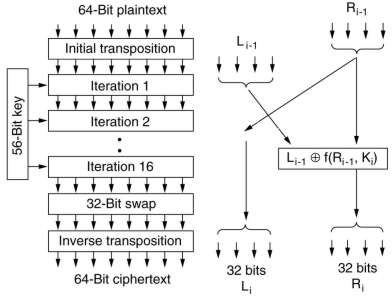

8.2.1 DES - Data Encryption Standard

In January 1977, the US government adopted a cipher developed by IBM as its official standard for unclassified information, called

DES. The original cipher used a 128-bit key, but it was changed to a

56-bit key. Many people suspected that this was done to allow NSA

(National Security Agency) to break the code, but no organization

with a smaller budget could.

DES is basically a monoalphabetic substitution cipher using a 64-bit

character. Whenever the same 64-bit plaintext block goes in, the

same 64-bit ciphertext block comes out.

The first stage is a key independent transposition on the

64-bit plaintext. The last stage is the exact inverse, before that

is an exchange of the leftmost with the rightmost 32 bits. The

remaining 16 stages are functionally identical but are

parameterized by different functions of the key.

The left output of an iteration stage is simply a copy of the

right input. The right output is the exclusive OR of the left

input and a function of the right input and the key for this

iteration. All the complexity lies in this functions which

consists of 4 sequential steps.

|

Triple encryptions is also used (double encryption is probably not much

more secure than single encryption). The EDE mode requires 2 keys, the 112 bits key was considered

secure enough.

By setting K1=K2 one can speak with systems

which only use 1 key.

|

8.2.2 AES - Advanced Encryption Standard

For the new US standard a competition was setup and in 2001 Rijndael was selected.

It is also a monoalphabetic substitution cipher with a 128 bit block and a key length of 128 or 256 bits.

From a mathematical perspective Rijndael is based on Galois field theory which gives it some provable

security properties. All computing operations operate on full bytes and a good software implementation

on a 2 GHz machine can achieve a fast encryption rate of 700 Mbps

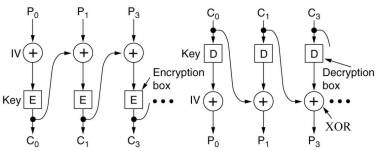

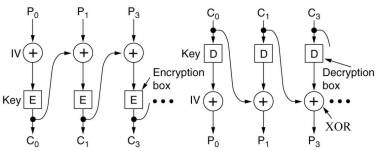

8.2.3 Cipher codes

The fact that DES and AES is a monoalphabetic substitution cipher can be

exploited by a cryptanalyst to break it. All block ciphers can be chained in various ways

to thwart this kind of attack.

In cipher block chaining the first block ( 64 bits of DES) is first XOR'ed with a randomly

chosen initialization vector (IV), that is transmitted (in plaintext) along with the

ciphertext. Each next block is XOR'ed with ciphertext of the

previous block before being encoded with DES.

8.2.4-8.2.5 Not for this course

8.3 Public key algorithms

Historically, distributing the keys has always been the weakest link in most cryptosystems.

It was always taken for granted that the encryption and decryption key were the same or could easily be derived

from each other.

In 1976 a radically new kind of cryptosystem was proposed, using different keys

for encryption (the public key) and decryption (the private key

). The keys are extremely difficult to derive

from each other. A user generates both keys, publishes the public key

so anyone can encrypt a message for him, but keeps the private key

really secret so only he can decrypt the message.

8.3.1 The RSA algorithm

This public-key algorithm is named after the initials of its 3

inventors. It consists of the following steps:

- Choose two large primes, p and q (typically 1024 bits)

- Compute n = p * q and z = (p-1) * (q-1)

- Choose a number d that is relatively prime to z

- Find e such that (e*d) mod z = 1

- Divide the plaintext into blocks of length k, with 2k

< n (largest k)

- C = Pe mod n

- P = Cd mod n

The public key is thus the pair (e,n) and the private key is the

pair (d,n). The security of the method is based on the difficulty of

factoring the publicly known large number n into a product of 2

primes. Note that RSA is also a monoalphabetic substitution cipher,

so cipher block chaining can be used also.

RSA is too slow for actually encrypting large volumes of data.

Most RSA based systems use it to distribute one-time session keys for

use with DES or similar algorithms.

8.4 Digital signatures

This is intended to replace handwritten signatures on paper.

Basically it is a system by which one party can send a signed message to another party in such a way that:

- The receiver can verify the claimed identity of the sender (authentication)

- The sender cannot later repudiate the contents of the message (nonrepudiation)

- The receiver cannot possibly have concocted the message himself (integrity)

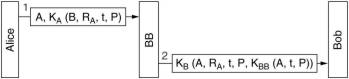

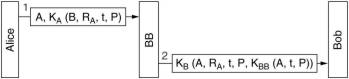

Using symmetric key algorithms this can be accomplished by having a trusted authority (Big Brother)

having the secret keys of all participants.

User A sends then to BB its encrypted information, build up from its plaintext, the identity

of user B, a random number and a timestamp. BB decrypts this message and sends user B a message encrypted with the key of B.

It contains the identity of A; the plaintext, random number and timestamp send by A;

and information encrypted by BB with its own secret key.

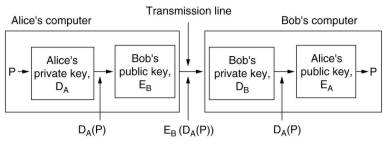

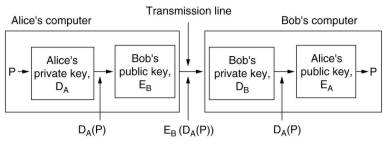

With public-key algorithms digital signatures can be achieved, provided they have the property

that E(D(P))=P, besides the usual property that D(E(P))=P. RSA is an example.

This scheme has problems in case A's private key becomes known to others or A changes the keys.

One criticism of the above signature methods is that they couple two distinct functions: authentication and secrecy.

A message digest is an authentication scheme that does not require encrypting the entire message.

It uses a hash function that computes a fixed-length bit string from an arbitrarily long piece of plaintext.

To be used as a MD the hash function needs to have four important properties:

- Given P, it is easy to compute MD(P)

- Given MD(P), it is effectively impossible to find P

- Given P no one can find P' such that MD(P')=MD(P). this requires the hash to be at least 128 bits.

- A change to the input of even 1 bit produces a very different output, this requires the hash to mangle the bits very thoroughly.

A widely used MD is MD5. It padds the message to a length of 448 bits modulo 512 and then appends the

original length of the message as an 64 bit integer. It initializes the 128-bit hash output and then it mixes

each input block of 512 bits with the hash in 4 rounds.

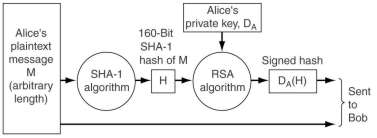

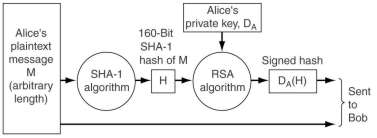

SHA-1 is the other major MD. Like MD5 it processes input blocks of 512 bits but it generates a 160-bit hash

(5 integers of 32 bits).

User B can validate the received plaintext M by calculating H=SHA-1(M), which should be equal to EADA(H),

where the encoding is done with the public key of A. This uses the RSA property that E(D(P))=P, besides the property that D(E(P))=P.

8.5 Not for this course

8.6 Communication security

8.6.1 IPsec

IETF has known for years that security was lacking in the Internet.

But where to put it?

Most security experts believe that to be rally secure, encryption and integrity checks have to be end to end,

thus in the application layer. But this require changing applications to make them security aware.

The next best layer would then be the network layer.

The opposite view is that users do not understand security and will not be capable of using it correctly.

Thus the network layer should authenticate and/or encrypt packets without the users being involved.

This view gained enough support that a network layer security protocol was defined. It is called

IPsec, IP security.

IPsec provides multiple services: secrecy, data integrity and protection from replay attacks.

They are based on symmetric-key cryptography because high performance is crucial. It also allows for

multiple algorithms allowing newer ones in the future when older ones are broken or not secure enough any more.

Also multiple granularities are provided making it possible to protect a single TCP connection, all traffic between

a pair of hosts or of secure routers.

IPsec, although in the IP layer, is connected oriented. It needs to be, because to have any security

a key must be established and used for some period of time. In essence this is a kind of connection

which is called a security association (SA) in IPsec.

How the key is established is described by the ISAKMP protocol.

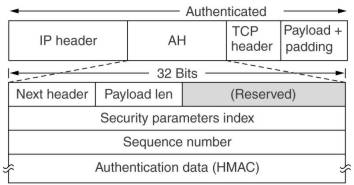

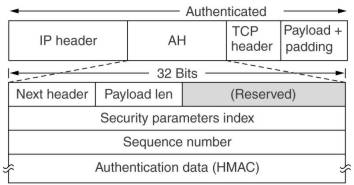

A first new header provided is Authentication Header (AH). It provides integrity and antireplay security,

but not secrecy. In IPv4 it is placed after the IP header of which the Protocol field is changed to 51 and the

original value is placed in the Next header field. In IPv6 it is just another extension header.

Shown here is AH in the so called transport mode. Also tunnel mode is possible in which the entire

original IP packet, is encapsulated in the body of a new IP packet.

This is useful when the tunnel ends at a location other than the final destination, e.g. a firewall.

The security parameters index is the connection identifier, it points to a particular record in a database of the receiver.

This contains the shared (by sender and receiver) key used on this connection and other information about the connection.

The sequence number may not wrap around, if all 232 are used, a new SA must be established.

The authentication data (HMAC) contains a hash over the packet and the shared key.

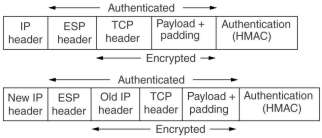

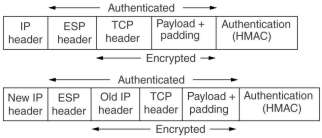

The alternative is the ESP (Encapsulating Security Payload) header, which provides also encryption

in transport mode and tunnel mode. It contains an Initialization Vector used in the encryption besides the

security parameters index and the sequence number. The HMAC comes now after the payload which makes it easier

to implement in hardware.

8.6.2 Firewalls

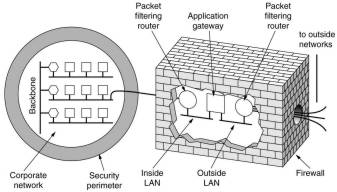

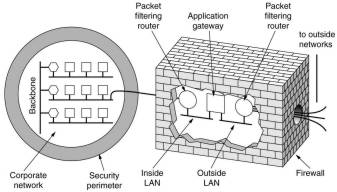

Each packet filter is a router with the extra possibility of

examining packets and filter out unwanted ones. This is usually

driven by tables listing sources and destinations that are

acceptable or not, and default rules about what to do with other

ones. In UNIX a source or destination consists of an IP number

(indicating the host) and a port, indicating the service: 23 for

Telnet, 79 for Finger or 119 for USENET news. A company could

block all incoming packets for all IP addresses with ports 19 and

79, no one outside can then log in via Telnet or look up people

using the Finger daemon. Blocking outgoing packets is trickier,

because sites are not forced to stick to the standard port naming

conventions and for some services, like FTP, certain port numbers

are assigned dynamically.

Usually the filter on the inside LAN checks outgoing packets

and the one on the outside LAN checks incoming packets. The reason

to use different LANs is to ensure that no packets go in or out

without passing through the application gateway. This

gateway works at the application level. A mail gateway, for

example, can be set up to examine each message going in or out and

transmit or discard it based on header fields, message size or

even the content.

8.6.3 - 8.6.4 not for this course

8.7 Authentication protocols

Authentication is the technique by which a process verifies that its communication partner is who it is supposed

to be and not an imposter. In face of a malicious, active intruder this is surprisingly difficult

and requires complex protocols based on cryptography. Besides message exchange between two parties, also a trusted

KDC (Key Distribution Center) may be involved. Most protocols establish a secret session key for

use in the upcoming data message exchanges. They are encrypted using this key, for performance reasons usually

with a symmetric-key method like AES or triple DES). The protocols themselves usually use public-key cryptography.

Authentication should not be confused with authorization which is the permission a person or process has to do something,

like deleting a file.

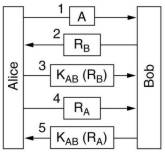

8.7.1 Authentication based on a shared secret key

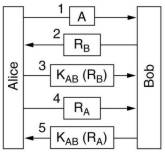

A challenge-response protocol, the challenge is a random number Ri, the response is its

encryption with the shared key KAB. The random number is taken from a large space to make it unlikely

that an intruder has seen it before. For the session key A can choose it and send it encrypted with KAB to B.

An active intruder can break-in this method under certain circumstances.

There exists variants of the above protocol, where an active intruder can not break-in, but they are much more complicated.

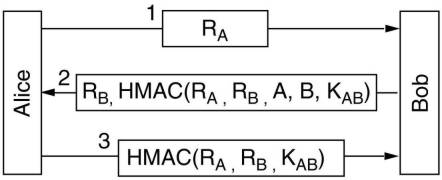

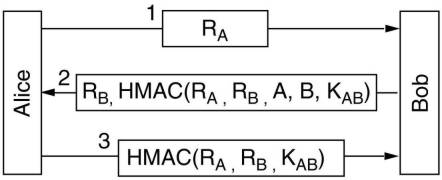

A different kind of protocols use a HMAC as used by IPsec. An active intruder can not break in here.

8.7.1 Establishing a shared secret key

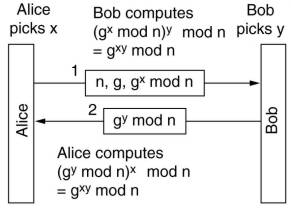

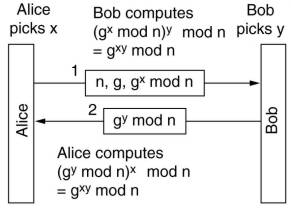

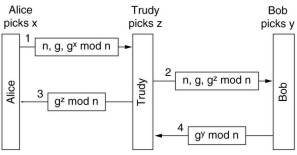

The Diffie-Hellman key exchange method is based on the fact that there are no practical ways to compute x given gx mod n.

This is so when n and (n-1)/2 are prime, also g has some conditions on it and n,g and x are large.

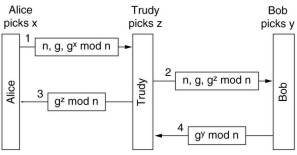

It can be broken by a man-in-the-middle (or bucket brigade) attack.

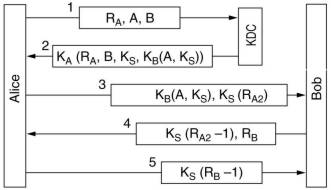

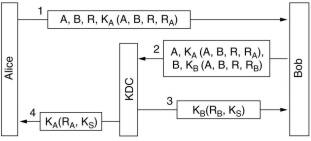

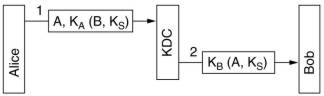

8.7.2 Authentication using a Key Distribution Center

Communicating with n others using n shared keys gives a key management and security problem.

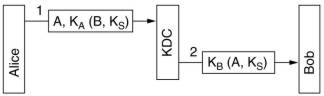

With a trusted KDC, each user shares a single key with the KDC and thus has less problems.

A KDC can be used in an authentication protocol, a simple method is shown here. KS is the

session key chosen by A, KA is the key shared by A and the KDC.

This simple method can be attacked using replay of messages. This can be guarded against using timestamps

and using more random numbers, called nonces, but then the protocol gets complicated.

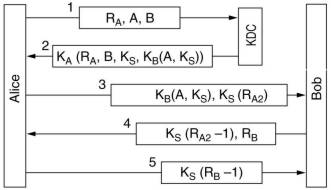

The Needham-Schroeder protocol uses a multiway challenge-response, of the many variants 1 is shown here.

Three nonces are used to guard against replays. If someone manage to get an old session key in plaintext,

then he can pretend to be A by replaying message 3. A variant of this protocol exists which solves this problem.

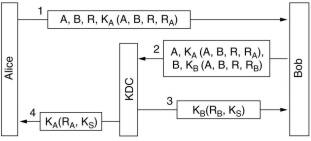

The Otway-Rees protocol, shown slightly simplified here, does not have this replay problem and it uses less messages.

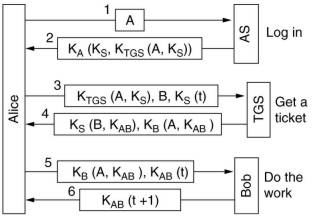

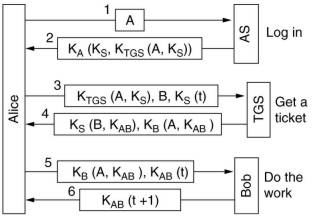

8.7.3 Authentication using Kerberos

Kerberos is used in many real systems, including Windows 2000. It was designed at MIT to allow network users

to access network resources in a secure way. The biggest difference from Needham-Schroeder is the assumption that

all clocks are fairly well synchronized.

The Authentication Server (AS) verifies users during login, it is similar to a KDC. After message 2 arrives,

the workstation of A(lice) asks A for her password to generate KA used to encrypt the information provided

by AS. Then password and KA are erased, so they are only a few milliseconds in the memory of the workstation.

Using KS and KTGS(A,KS) A asks the Ticket-Granting Server (TGS) for a ticket

,KB(A,KAB), and a shared key, KAB to access and communicate with server B. B can be

a file, compute or communication server. Authorization messages to TGS and B are timestamped to guard against

replay attacks.

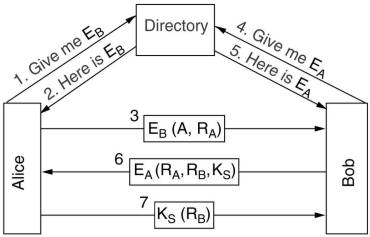

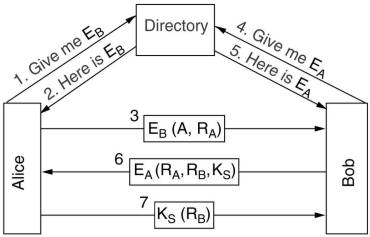

8.7.3 Authentication using public key cryptography

The directory contains certified public keys of many users.

8.8 E-mail security

8.8.1 PGP Pretty Good Privacy

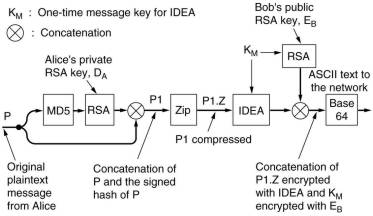

PGP is essential the development of one person, Phil Zimmermann.

It is a complete e-mail security package that provides security, authentication, digital signatures and compression,

all in a easy-to-use form. It is free, including the source code, and it is widely used on many platforms.

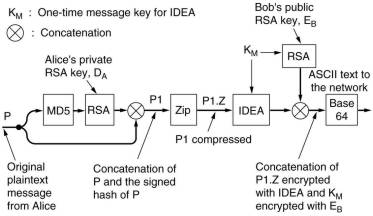

The plaintext is hashed using MD5 and then the resulting hash is encrypted using RSA with the private key of the sender.

The plaintext and the signed hash are then concatenated and compressed using ZIP, which uses the Ziv-Lempel algorithm.

Then the sender types some random input, both the content and the typing speeds are used to generate a 128-bit key.

This is used by the block cipher IDEA (International Data Encryption Algorithm)to encrypt the ZIP output.

The key itself is encrypted with RSA using the public key of the receiver. The two are concatenated and then converted

using Base-64 to ASCII characters

PGP is fast, the plaintext is encrypted using the fast IDEA with a once only key. RSA is used to encrypt twice a 128-bit key,

so a very long 2048 bit key can be chosen, although smaller ones are possible. It is also unbreakable, that's why the US government

tried to prevent its use. Special attention is paid to key management as that is the Achilles heel of all security systems.

Gewijzigd op 3 april 2003 door Theo

Schouten.